Objective: Install Oracle 11g Grid Infrastructure on 64-bit Oracle Linux virtual machines running on VMware ESXi 5.5, in preparation for an Oracle 11g RAC database

Pre-Requisites:-

a. Download the Oracle 11.2.0.3 software from Oracle.

b. I have already created 2 nodes (virtual machines) running 64-bit Oracle Linux on the ESXi host.

c. I have downloaded the 11g Grid Infrastructure files and created a shared disk.

Start the Install:-

1) Make sure both nodes are up. On node1.babulab, as the "oracle" user, navigate to the directory where the Grid Infrastructure software was extracted and start the installer:

[oracle@node1 ~]$ cd /home/oracle/sw/grid

[oracle@node1 grid]$ ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 9241 MB Passed

Checking swap space: must be greater than 150 MB. Actual 4031 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2014-02-19_05-53-05PM. Please wait ...
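The space checks printed by the installer can be reproduced before launching it, which saves a failed start on a small VM. Below is a minimal sketch, assuming a Linux shell with `df` and `/proc/meminfo` available. The 120 MB and 150 MB thresholds come from the installer output above; the function names are my own and are not part of any Oracle tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper names; thresholds are taken from the installer output above.

# Print the free space in MB of the filesystem holding the given directory.
free_mb() {
    df -Pm "$1" | awk 'NR == 2 { print $4 }'
}

# Print the total swap in MB as reported by /proc/meminfo (Linux only).
swap_mb() {
    awk '/^SwapTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# Succeed when the actual value is strictly greater than the required minimum.
exceeds() {
    [ "$1" -gt "$2" ]
}

# Report one check in a format similar to the installer's.
report() {
    local label="$1" actual="$2" required="$3"
    if exceeds "$actual" "$required"; then
        echo "$label: must be greater than $required MB. Actual $actual MB Passed"
    else
        echo "$label: must be greater than $required MB. Actual $actual MB Failed"
    fi
}
```

On a node it could be called as `report "Temp space" "$(free_mb /tmp)" 120` and `report "Swap space" "$(swap_mb)" 150`.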

2) Once the installer starts, you will see the screen below. I chose to skip the software updates, clicked Next, and ignored the warnings.

3) Select "Install and Configure Oracle Grid Infrastructure for a Cluster" in the install options screen:-

4) Choose "Advanced Installation". On the next screen, I selected English.

5) Provide the Cluster name, SCAN name and Port

6) Click "Add" to add the 2nd node:-

7) Provide the Public and Virtual Hostname for node2 and click OK

8) Click the "SSH Connectivity..." button:-

9) Enter the password for the "oracle" user. Click the "Setup" button to configure SSH connectivity, and the "Test" button to test it once it is complete. Then press "Next".
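The "Test" button can also be double-checked from a terminal once setup is done. The sketch below assumes the node names `node1` and `node2` used in this lab and relies on the standard OpenSSH options `BatchMode` and `ConnectTimeout`; the function name `check_ssh` is my own.

```shell
#!/usr/bin/env bash
# Hypothetical helper: try a harmless remote command on each host and
# refuse to fall back to a password prompt (BatchMode=yes).
check_ssh() {
    local host
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
        fi
    done
}
```

Running `check_ssh node1 node2` as the "oracle" user on each node should print `ok` for both hosts if passwordless SSH works in every direction.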

10) In the Network Interface Usage screen, verify that the correct subnet and interface type are selected for each interface name.
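To compare what the installer shows against the operating system, the interface-to-subnet mapping can be listed on each node. This is a small sketch that filters the one-line output of `ip -o -4 addr show`; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and
# print "interface address/prefix" for every IPv4 address.
iface_subnets() {
    awk '$3 == "inet" { print $2, $4 }'
}
```

Usage would be `ip -o -4 addr show | iface_subnets` on node1 and node2. The public and private interfaces should carry the same names and sit in the same subnets on both nodes.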

11) In the Storage Option screen, select Oracle ASM.

12) Provide a name for the ASM disk group, select "External" redundancy and "Candidate Disks", and click "Change Discovery Path".

13) Provide the path (/dev/oracleasm/disks) where the shared disk that we created previously is located, and click OK.

14) The installer will now discover the shared disk. Select it and click Next.
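If the installer does not discover any disk, it helps to confirm that the discovery path really contains the shared disk on both nodes. A minimal sketch, assuming the disks appear as entries under /dev/oracleasm/disks as in this setup; the function name is my own.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the entries in the ASM discovery directory.
# Defaults to the path used in this walkthrough.
list_asm_disks() {
    local dir="${1:-/dev/oracleasm/disks}"
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
    ls -1 "$dir"
}
```

Running `list_asm_disks` on each node should show the same disk name on both; in my case that is SHARED_DISK1.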

15) I chose to use the same password for both SYS and ASMSNMP. Specify and confirm the ASM password. If the password is as simple as mine, the installer will show a warning like the one below. Acknowledge it and click Next:-

Click Yes

16) I chose not to use Intelligent Platform Management Interface (IPMI):-

17) Below are the default options for the OS groups. I kept them as they are and clicked Next.

Click Yes

18) Specify the Oracle Base and Software Location and click next:
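One thing worth checking on this screen: for a cluster install, the Grid software location should not sit underneath the Oracle base directory. The sketch below tests that with plain string matching. The two paths in the usage note are the ones that appear later in this post; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when path $2 is the same as, or nested
# inside, directory $1. Trailing slashes are ignored.
is_under() {
    local parent="${1%/}" child="${2%/}"
    case "$child/" in
        "$parent"/*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `is_under /u01/app/oracle /u01/app/11.2.0/grid` returns non-zero, which is the desired result for the Oracle base and Grid home used here.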

19) Specify the Inventory Location and click next:

Performing Prerequisite checks

20) You will receive these warnings (the 1st one is a bug; the 2nd one appears because we are not using DNS/GNS to resolve the SCAN).
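Since the SCAN is not in DNS here, it has to be resolvable some other way, typically through /etc/hosts on every node. The sketch below prints the addresses a hosts-format file maps to a given name. The SCAN name `rac-scan` in the usage note is a placeholder, not the name used in this install, and the function name is my own.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every address that a hosts-format file
# maps to the given name. Comment lines and trailing comments are skipped.
hosts_addrs() {
    local name="$1" file="${2:-/etc/hosts}"
    awk -v n="$name" '
        /^[ \t]*#/ { next }
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break
                if ($i == n) { print $1; break }
            }
        }
    ' "$file"
}
```

Running `hosts_addrs rac-scan` on both nodes should print the same address on each. An empty result means the name is missing from that node's hosts file.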

21) Check "Ignore All" and click Next.

Click Yes on the warning below

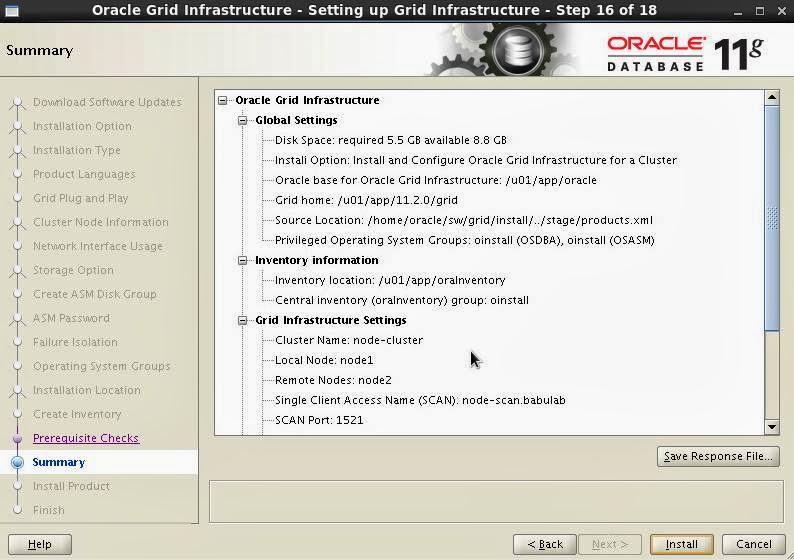

22) Review the summary and click Install:-

Installation in progress...

23) Before the install is complete, you will be prompted with the screen below.

Run the scripts below as the "root" user, first on the node where the install was initiated (node1.babulab):-

[oracle@node1 ~]$ su root

Password:

[root@node1 oracle]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@node1 oracle]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to upstart

CRS-2672: Attempting to start 'ora.mdnsd' on 'node1'

CRS-2676: Start of 'ora.mdnsd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'node1'

CRS-2676: Start of 'ora.gpnpd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node1'

CRS-2672: Attempting to start 'ora.gipcd' on 'node1'

CRS-2676: Start of 'ora.gipcd' on 'node1' succeeded

CRS-2676: Start of 'ora.cssdmonitor' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'node1'

CRS-2672: Attempting to start 'ora.diskmon' on 'node1'

CRS-2676: Start of 'ora.diskmon' on 'node1' succeeded

CRS-2676: Start of 'ora.cssd' on 'node1' succeeded

ASM created and started successfully.

Disk Group DSK_GRP_01 created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 31879bfe0a0b4fdcbfcf37cc143a6483.

Successfully replaced voting disk group with +DSK_GRP_01.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 31879bfe0a0b4fdcbfcf37cc143a6483 (/dev/oracleasm/disks/SHARED_DISK1) [DSK_GRP_01]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'node1'

CRS-2676: Start of 'ora.asm' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.DSK_GRP_01.dg' on 'node1'

CRS-2676: Start of 'ora.DSK_GRP_01.dg' on 'node1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Run the same scripts as the "root" user on the remaining nodes (node2.babulab), then click OK on the screen above.
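Before moving on to the next node, it is worth confirming that root.sh really finished on the current one. A minimal sketch, assuming the root.sh output was saved to a file (for example with `tee`); the function name and the file name in the usage note are hypothetical. The marker string is the last line of the output shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when a saved root.sh output file contains
# the final success line shown in the transcript above.
root_sh_succeeded() {
    grep -q -F "Configure Oracle Grid Infrastructure for a Cluster ... succeeded" "$1"
}
```

For example, after `/u01/app/11.2.0/grid/root.sh | tee /tmp/root_node1.out`, the check `root_sh_succeeded /tmp/root_node1.out && echo "node1 done"` tells you it is safe to start on node2.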

24) Installation continues to wrap up..

25) At the end of the installation, you will see the message below saying that the Oracle Cluster Verification Utility failed. This is again caused by the SCAN resolution issue we saw previously. Acknowledge it by clicking "OK".

26) Click next

Click Yes

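27) Click Close. The Oracle 11g Grid Infrastructure install is now complete.

28) Now, let us check the status of ASM / Oracle High Availability Services. Log in as "root" on node1. I run both crs_stat and crsctl below; crs_stat is deprecated in 11g Release 2, and crsctl status resource is its replacement.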

[root@node1 oracle]# . oraenv

ORACLE_SID = [oracle] ? +ASM1

The Oracle base has been set to /u01/app/oracle

[root@node1 oracle]# crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....P_01.dg ora....up.type ONLINE ONLINE node1

ora....ER.lsnr ora....er.type ONLINE ONLINE node1

ora....N1.lsnr ora....er.type ONLINE ONLINE node2

ora....N2.lsnr ora....er.type ONLINE ONLINE node1

ora....N3.lsnr ora....er.type ONLINE ONLINE node1

ora.asm ora.asm.type ONLINE ONLINE node1

ora.cvu ora.cvu.type ONLINE ONLINE node1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE node1

ora....SM1.asm application ONLINE ONLINE node1

ora....E1.lsnr application ONLINE ONLINE node1

ora.node1.gsd application OFFLINE OFFLINE

ora.node1.ons application ONLINE ONLINE node1

ora.node1.vip ora....t1.type ONLINE ONLINE node1

ora....SM2.asm application ONLINE ONLINE node2

ora....E2.lsnr application ONLINE ONLINE node2

ora.node2.gsd application OFFLINE OFFLINE

ora.node2.ons application ONLINE ONLINE node2

ora.node2.vip ora....t1.type ONLINE ONLINE node2

ora.oc4j ora.oc4j.type ONLINE ONLINE node1

ora.ons ora.ons.type ONLINE ONLINE node1

ora.scan1.vip ora....ip.type ONLINE ONLINE node2

ora.scan2.vip ora....ip.type ONLINE ONLINE node1

ora.scan3.vip ora....ip.type ONLINE ONLINE node1

[root@node1 oracle]# crsctl status resource -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DSK_GRP_01.dg

ONLINE ONLINE node1

ONLINE ONLINE node2

ora.LISTENER.lsnr

ONLINE ONLINE node1

ONLINE ONLINE node2

ora.asm

ONLINE ONLINE node1 Started

ONLINE ONLINE node2 Started

ora.gsd

OFFLINE OFFLINE node1

OFFLINE OFFLINE node2

ora.net1.network

ONLINE ONLINE node1

ONLINE ONLINE node2

ora.ons

ONLINE ONLINE node1

ONLINE ONLINE node2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE node2

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE node1

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE node1

ora.cvu

1 ONLINE ONLINE node1

ora.node1.vip

1 ONLINE ONLINE node1

ora.node2.vip

1 ONLINE ONLINE node2

ora.oc4j

1 ONLINE ONLINE node1

ora.scan1.vip

1 ONLINE ONLINE node2

ora.scan2.vip

1 ONLINE ONLINE node1

ora.scan3.vip

1 ONLINE ONLINE node1
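With this many resources it is easy to miss one that is down. The sketch below filters `crsctl status resource -t` output and prints only the OFFLINE entries together with the resource they belong to. It assumes the layout shown above, where a resource name line starting with `ora.` is followed by its state lines; the function name is my own. As far as I know, ora.gsd being OFFLINE is normal in 11g Release 2, since it is only needed for Oracle 9i databases.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl status resource -t` output on stdin and
# print each OFFLINE state line prefixed with its resource name.
offline_resources() {
    awk '
        $1 ~ /^ora\./ { name = $1; next }   # remember the current resource
        /OFFLINE/ {
            sub(/^[ \t]+/, "")              # drop leading indentation
            print name ": " $0
        }
    '
}
```

Usage would be `crsctl status resource -t | offline_resources`. With the output above it should list only the ora.gsd lines for node1 and node2.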

29) I don't want Oracle Clusterware and the High Availability Services to start automatically when the VMs boot, so I am disabling autostart. The setting is per node, so run the command on each node:-

[root@node2 oracle]# crsctl disable crs

CRS-4621: Oracle High Availability Services autostart is disabled.
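The current setting can be read back at any time with `crsctl config crs`, which prints a message in the same style as the one above. The sketch below turns that message into a single word, matching on the message text rather than the message number; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify the autostart message printed by crsctl.
autostart_state() {
    case "$1" in
        *"autostart is disabled"*) echo disabled ;;
        *"autostart is enabled"*)  echo enabled ;;
        *) echo unknown ;;
    esac
}
```

As root on each node, `autostart_state "$(crsctl config crs)"` should print `disabled` after this step. With autostart off, the stack has to be started by hand with `crsctl start crs` after each boot.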

30) In the "top" output, I see the "ologgerd" daemon consuming too many system resources.

This daemon is spawned by the RAC Cluster Health Monitor tool, which I don't need, so I am stopping and disabling it:-

[root@node2 oracle]# top

top - 13:12:41 up 1:05, 3 users, load average: 0.78, 0.48, 0.56

Tasks: 178 total, 1 running, 177 sleeping, 0 stopped, 0 zombie

Cpu(s): 7.8%us, 4.8%sy, 0.0%ni, 81.5%id, 5.1%wa, 0.0%hi, 0.8%si, 0.0%st

Mem: 2055064k total, 1872572k used, 182492k free, 52320k buffers

Swap: 4128764k total, 6564k used, 4122200k free, 958024k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

2582 oracle RT 0 636m 104m 53m S 2.0 5.2 0:15.15 ocssd.bin

2696 root RT 0 626m 144m 58m S 2.0 7.2 0:10.05 ologgerd

2762 oracle -2 0 485m 14m 12m S 2.0 0.7 0:34.86 oracle

3248 oracle 20 0 336m 6448 3348 S 2.0 0.3 0:03.76 vmtoolsd

1 root 20 0 19408 1244 936 S 0.0 0.1 0:00.99 init

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

3 root 20 0 0 0 0 S 0.0 0.0 0:02.53 ksoftirqd/0

5 root 20 0 0 0 0 S 0.0 0.0 0:00.13 kworker/u:0

6 root RT 0 0 0 0 S 0.0 0.0 0:00.00 migration/0

7 root RT 0 0 0 0 S 0.0 0.0 0:00.29 watchdog/0

8 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 cpuset

9 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 khelper

10 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 netns

11 root 20 0 0 0 0 S 0.0 0.0 0:00.00 sync_supers

12 root 20 0 0 0 0 S 0.0 0.0 0:00.00 bdi-default

13 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kintegrityd

14 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kblockd

[root@node2 oracle]# crsctl stop resource ora.crf -init

CRS-2673: Attempting to stop 'ora.crf' on 'node2'

CRS-2677: Stop of 'ora.crf' on 'node2' succeeded

[root@node2 oracle]# crsctl delete resource ora.crf -init

You will have to do the same on the other nodes as well.
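To check a single daemon on each node without reading the whole screen, the batch output of top can be filtered by command name. This sketch assumes the default top column layout shown above (PID first, %CPU and %MEM in columns 9 and 10, command last); the function name is my own.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read top batch output on stdin and print
# "PID %CPU %MEM" for processes whose command matches exactly.
proc_usage() {
    local cmd="$1"
    awk -v c="$cmd" '$NF == c { print $1, $9, $10 }'
}
```

Usage would be `top -b -n 1 | proc_usage ologgerd`. After ora.crf is stopped, the command should print nothing.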

31) Stop the Cluster / HA services on both nodes. The output below is from node1; run the same command on node2.

[root@node1 oracle]# crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'node1'

CRS-2673: Attempting to stop 'ora.crsd' on 'node1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'node1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN3.lsnr' on 'node1'

CRS-2673: Attempting to stop 'ora.oc4j' on 'node1'

CRS-2673: Attempting to stop 'ora.cvu' on 'node1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'node1'

CRS-2673: Attempting to stop 'ora.DSK_GRP_01.dg' on 'node1'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'node1'

CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.scan2.vip' on 'node1'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.node1.vip' on 'node1'

CRS-2677: Stop of 'ora.LISTENER_SCAN3.lsnr' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.scan3.vip' on 'node1'

CRS-2677: Stop of 'ora.node1.vip' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.node1.vip' on 'node2'

CRS-2677: Stop of 'ora.scan3.vip' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.scan3.vip' on 'node2'

CRS-2677: Stop of 'ora.scan2.vip' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.scan2.vip' on 'node2'

CRS-2676: Start of 'ora.node1.vip' on 'node2' succeeded

CRS-2676: Start of 'ora.scan3.vip' on 'node2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN3.lsnr' on 'node2'

CRS-2676: Start of 'ora.scan2.vip' on 'node2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN2.lsnr' on 'node2'

CRS-2676: Start of 'ora.LISTENER_SCAN3.lsnr' on 'node2' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN2.lsnr' on 'node2' succeeded

CRS-2677: Stop of 'ora.oc4j' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.oc4j' on 'node2'

CRS-2677: Stop of 'ora.cvu' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cvu' on 'node2'

CRS-2676: Start of 'ora.cvu' on 'node2' succeeded

CRS-2676: Start of 'ora.oc4j' on 'node2' succeeded

CRS-2677: Stop of 'ora.DSK_GRP_01.dg' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'node1'

CRS-2677: Stop of 'ora.asm' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'node1'

CRS-2677: Stop of 'ora.ons' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'node1'

CRS-2677: Stop of 'ora.net1.network' on 'node1' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'node1' has completed

CRS-2677: Stop of 'ora.crsd' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'node1'

CRS-2673: Attempting to stop 'ora.ctssd' on 'node1'

CRS-2673: Attempting to stop 'ora.evmd' on 'node1'

CRS-2673: Attempting to stop 'ora.asm' on 'node1'

CRS-2677: Stop of 'ora.mdnsd' on 'node1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'node1' succeeded

CRS-2677: Stop of 'ora.asm' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'node1'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'node1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'node1'

CRS-2677: Stop of 'ora.cssd' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.crf' on 'node1'

CRS-2677: Stop of 'ora.crf' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'node1'

CRS-2677: Stop of 'ora.gipcd' on 'node1' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'node1'

CRS-2677: Stop of 'ora.gpnpd' on 'node1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'node1' has completed

CRS-4133: Oracle High Availability Services has been stopped.
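The shutdown log is long, so here is a small sketch for confirming that every stop attempt was followed by a success line. It pairs the CRS-2673 ("Attempting to stop") and CRS-2677 ("Stop of ... succeeded") messages seen above and prints whatever is left unmatched. It assumes the output was captured to a file or pipe; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl stop crs` output on stdin and print
# every "resource on node" whose stop attempt has no matching success line.
unfinished_stops() {
    awk -F"'" '
        /CRS-2673:/ { pending[$2 " on " $4]++ }   # stop attempted
        /CRS-2677:/ { pending[$2 " on " $4]-- }   # stop succeeded
        END { for (k in pending) if (pending[k] > 0) print k }
    '
}
```

For example, `crsctl stop crs 2>&1 | tee /tmp/stop_node1.out | unfinished_stops` prints nothing when every resource stopped cleanly, as in the run above.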

32) Remove the Grid Infrastructure installation files (the ones we extracted for the install) to free up space.

$ cd /home/oracle/sw

$ rm -rf grid

33) The next step is to add a hard disk to node1 (optional) and install the Oracle 11g RAC database.
