Migrate Oracle database from On-Premises to Autonomous Database (ADB) Cloud using Oracle Data Pump

Objective: Migrate a schema from On-Premises Oracle Database to Oracle Autonomous Databases on Cloud using Data Pump

Environments:

Destination DB: Oracle Autonomous Databases (ADB) on Cloud

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production [Version 19.5.0.0.0]

Transaction Processing / Shared Infrastructure / Always Free configuration

Source DB: On-Premises, Windows 10, 64 Bit.

Oracle Database 18c Express Edition Release 18.0.0.0.0 - Production [Version 18.4.0.0.0]

I have already configured Oracle Net from my PC to connect to the ADB as per my previous post.

Step 1: Create and prepare sample schema at source DB

Create a user called "BABUR" and some sample objects, so we can migrate this schema.

@> conn sys/xxxxxxxx@XE as sysdba

Connected.

SYS@XE> ALTER SESSION SET CONTAINER = SDEDB;

Session altered.

create user BABUR identified by xxxxxxxx

quota UNLIMITED on USERTBS1

default tablespace USERTBS1

CONTAINER=CURRENT;

GRANT

CREATE VIEW,

CREATE TABLE,

CREATE SESSION,

CREATE TRIGGER,

CREATE SEQUENCE

to BABUR;

CREATE DIRECTORY DATA_PUMP_DIR_SDE as 'C:\tmp\ADB_Exp';

GRANT READ,WRITE ON DIRECTORY DATA_PUMP_DIR_SDE TO BABUR;

CONN BABUR/xxxxxxxx@SDEDB

CREATE TABLE TEST_TAB (A NUMBER);

BEGIN

FOR X IN 1..100 LOOP

INSERT INTO TEST_TAB VALUES (X);

END LOOP;

COMMIT;

END;

/

CREATE INDEX TEST_IDX ON TEST_TAB(A);

CREATE VIEW TEST_VIEW AS SELECT * FROM TEST_TAB WHERE A > 50;

select object_name,object_type from user_objects order by 1;

OBJECT_NAME OBJECT_TYPE

------------------------------ -----------------------

TEST_IDX INDEX

TEST_TAB TABLE

TEST_VIEW VIEW

Step 2: Export the Schema using Oracle Data Pump

Created an export parameter file called "BABUR_DP_PAR_EXP.par" with below content:-

schemas=BABUR

directory=DATA_PUMP_DIR_SDE

dumpfile=BABUR.dmp

logfile=BABUR_DP_PAR_EXP.log

exclude=GRANT,STATISTICS,SYSTEM_GRANT,ROLE_GRANT,DEFAULT_ROLE,TABLESPACE_QUOTA,USER,PRE_SCHEMA

Run the data pump export using the parameter file:-

expdp "BABUR/xxxxxx@SDEDB" parfile=C:\tmp\ADB_Exp\BABUR_DP_PAR_EXP.par

Step 3: Upload the exported dump file.

In the Oracle Cloud Infrastructure Console, create a Bucket in the Object Storage and upload the dump file within that bucket:-

I have created a bucket called "BUCKETB", uploaded the exported dump file "BABUR.dmp" in the bucket and get the Object Details.

Note down the URL Path (URI), we will use it to run the data pump import:-

https://objectstorage.us-ashburn-1.oraclecloud.com/n/id0yw/b/BUCKETB/o/BABUR.DMP

Step 4: Generate Token

Auth Tokens are Oracle-generated token strings that is associated with Oracle Cloud Infrastructure username. We will create credential object with the Auth Token strings as password. The credential object will be used to run Oracle data pump import.

In the Oracle Cloud Infrastructure Console, Go to Identity --> Users --> your username (the one that is not federated)

On the left hand side, click on “Auth Tokens”. Create a new token by click on “Generate Token”.

Note down the random generated value, which will be used as credential password in DBMS_CLOUD. Lets say, mine is "My_Token_Password"

Step 5: Create Credential Object at ADB

conn admin/xxxxxxxx@tp01_low

ADMIN@tp01_low> select * from USER_CREDENTIALS;

no rows selected

BEGIN

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'BABU_CRED',

username => 'baburxxxxxy@hotmail.com',

password => 'My_Token_Password'

);

END;

/

COL CREDENTIAL_NAME FOR A15

COL ENABLED FOR A10

SET LINES 150

select CREDENTIAL_NAME,ENABLED from USER_CREDENTIALS;

CREDENTIAL_NAME ENABLED

--------------- ----------

BABU_CRED TRUE

Step 6: Create DB User at Destination (ADB)

Create a user called "DABUR"

create user DABUR identified by xxxxxxxxx quota 100m on DATA;

GRANT

CREATE VIEW,

CREATE TABLE,

CREATE SESSION,

CREATE TRIGGER,

CREATE SEQUENCE

to DABUR;

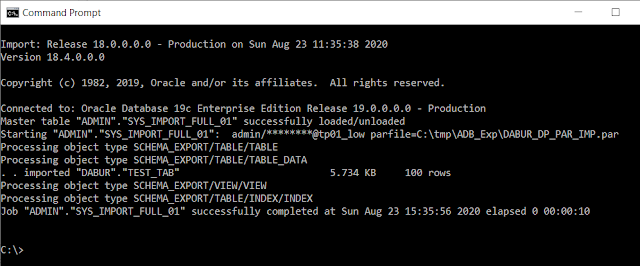

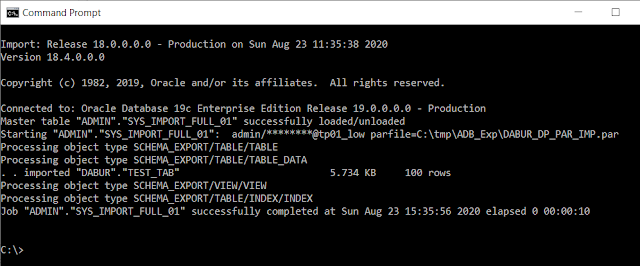

Step 7: Run Data Pump import as ADB admin user.

The data pump import can be run from any external environment where Oracle client and ADB connections are configured and compatible. I am running this from the source database environment.

Created an import parameter file called "BABUR_DP_PAR_IMP.par" with below content:-

remap_schema=BABUR:DABUR

remap_tablespace=USERTBS1:DATA

directory=data_pump_dir

credential=BABU_CRED

dumpfile=https://objectstorage.us-ashburn-1.oraclecloud.com/n/id0yw/b/BUCKETB/o/BABUR.DMP

logfile=DABUR_DP_IMP.log

Run the data pump import using the parameter file:-

impdp "admin/xxxx@tp01_low" parfile=C:\tmp\ADB_Exp\DABUR_DP_PAR_IMP.par

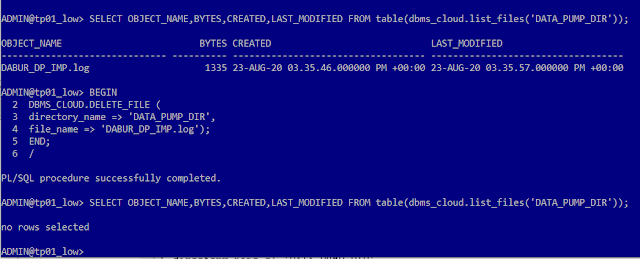

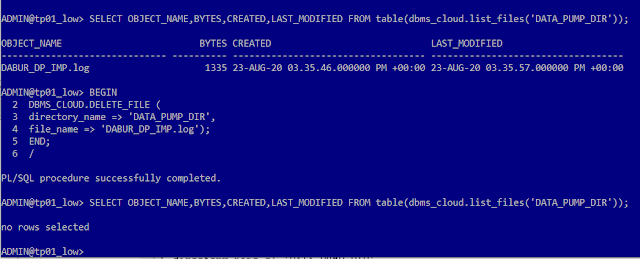

Step 8: Verify the import and Clean up

Check object counts at the destination:-

select object_name,object_type from dba_objects where owner = 'DABUR' order by 1;

OBJECT_NAME OBJECT_TYPE

------------------------------ -----------------------

TEST_IDX INDEX

TEST_TAB TABLE

TEST_VIEW VIEW

Apparently this simple schema import ran smooth with no errors, but if there were errors that needed to be addressed or recompiled or recreated, you may want to review the (import) log file, which is stored in the ADB server at the "data_pump_dir" directory. Let us see how to retrieve it and later delete it from the ADB server.

Copy the import log from ADB server to cloud Object Storage

col CREATED for a35

col LAST_MODIFIED for a35

set lines 150

SELECT OBJECT_NAME,BYTES,CREATED,LAST_MODIFIED FROM table(dbms_cloud.list_files('DATA_PUMP_DIR'));

OBJECT_NAME BYTES CREATED LAST_MODIFIED

------------------------------ ---------- ----------------------------------- -----------------------------------

DABUR_DP_IMP.log 1335 23-AUG-20 03.35.46.000000 PM +00:00 23-AUG-20 03.35.57.000000 PM +00:00

BEGIN

DBMS_CLOUD.PUT_OBJECT(

credential_name => 'BABU_CRED',

object_uri => 'https://objectstorage.us-ashburn-1.oraclecloud.com/n/id0yw/b/BUCKETB/o/DABUR_DP_IMP_COPY.log',

directory_name => 'DATA_PUMP_DIR',

file_name => 'DABUR_DP_IMP.log');

END;

/

Now you will see the import logfile copy in the Object Storage, which you can download to your PC and examine it.

Once copied to Object Storage, you can delete it from the ADB server / directory:-

BEGIN

DBMS_CLOUD.DELETE_FILE (

directory_name => 'DATA_PUMP_DIR',

file_name => 'DABUR_DP_IMP.log');

END;

/

Here we saw how to migrate a schema from an On-Premises Oracle Database to Oracle Autonomous Databases on Cloud using Oracle Data Pump.

Hope it helps, appreciate your feedback, thanks.